AI at Google

A snippet about Google that I found relevant in Thomas H. Davenport's book The AI Advantage:

"The next year, Google hired Geoffrey Hinton, the University of Toronto researcher who had helped to revive neural networks. In 2014 Google bought DeepMind, a London-based firm with deep expertise in deep learning. The group’s tools were used to help AlphaGo, Google’s machine that plays the ancient game Go, beat one of the world’s best human players. In 2016, the Google Brain organization helped Google make a major improvement in the ability of Google Translate to do accurate translations. By that year Google, or its parent company Alphabet, was employing machine learning in over 2,700 different projects across the company, including search algorithms (RankBrain), self-driving cars (now in the Alphabet subsidiary Waymo), and medical diagnostics (in the Calico subsidiary).18 In the Silicon Valley tradition, Google also made its TensorFlow machine learning library available for free in 2015 as an open source project, and it has become popular among more sophisticated companies that use AI."

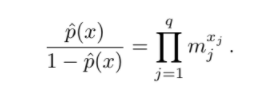

Thomas skips over some of the early history of AI at Google, which traces back to Epiphany, another popular Silicon Valley startup that later became a public company (and was later renamed E.piphany because the rights to the name were owned by a Bible publisher in Indiana). The machine learning team from Epiphany included my former E.piphany colleagues Mehran Sahami, now teaching AI at Stanford, and Sridhar Ramaswamy, later VP of all of Google Ads products. Both of them, and I as well, worked on SmartASS, or Smart Ads Serving System: a giant probabilistic prediction model and ad-serving framework that uses logistic regression to predict ad click-through rates. SmartASS performs this very expensive calculation using a special linear approximation, which makes the prediction practical in a reasonable amount of time. Over the years, SmartASS was refined and extended with many subsystems, including topic modelling, click-spam detection, fraud detection, and content ad classification, among many other improvements.
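To give a feel for what "logistic regression for click-through rate prediction" means, here is a minimal sketch in plain Python. It is not SmartASS code and does not reproduce its linear approximation. The feature names (`kw_match`, `pos_top`), the toy impression log, and the helper functions are all made up for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_ctr(weights: dict, features: list) -> float:
    """Click probability: sigmoid of the summed weights of the active binary features."""
    return sigmoid(sum(weights.get(f, 0.0) for f in features))


def train(impressions, epochs: int = 200, lr: float = 0.1, seed: int = 0) -> dict:
    """Fit the weights with plain stochastic gradient descent on log loss."""
    rng = random.Random(seed)
    weights = {}
    data = list(impressions)
    for _ in range(epochs):
        rng.shuffle(data)
        for features, clicked in data:
            # For log loss, the gradient with respect to the score is (prediction - label);
            # each active binary feature contributes 1 to the score, so its weight moves by that amount.
            error = predict_ctr(weights, features) - clicked
            for f in features:
                weights[f] = weights.get(f, 0.0) - lr * error
    return weights


# Hypothetical toy impression log: (active features, 1 if the ad was clicked else 0).
impressions = [
    (["bias", "kw_match", "pos_top"], 1),
    (["bias", "kw_match", "pos_top"], 1),
    (["bias", "kw_match"], 1),
    (["bias", "kw_match"], 0),
    (["bias", "pos_top"], 0),
    (["bias"], 0),
    (["bias"], 0),
]

weights = train(impressions)
ctr_relevant = predict_ctr(weights, ["bias", "kw_match", "pos_top"])
ctr_baseline = predict_ctr(weights, ["bias"])
print(f"relevant ad, top slot: {ctr_relevant:.3f}")
print(f"no signals at all:     {ctr_baseline:.3f}")
```

The model itself is just a weighted sum pushed through a sigmoid, so scoring one ad is cheap. The hard part at the scale of an ads system is the sheer number of features and impressions, which is presumably where the approximations mentioned above come in.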
